Effective obfuscation

Silicon Valley's "effective altruism" and "effective accelerationism" only give a thin philosophical veneer to the industry's same old impulses.

As Sam Bankman-Fried rose and fell, people outside of Silicon Valley began to hear about "effective altruism" (EA) for the first time. Then rifts emerged within OpenAI with the ouster and then reinstatement of CEO Sam Altman, and the newer phrase "effective accelerationism" (often abbreviated to "e/acc" on Twitter) began to enter the mainstream.

Both ideologies ostensibly center on improving the fate of humanity, offering anyone who adopts the label an easy way to brand themselves as a deep-thinking do-gooder. At the most surface level, both sound reasonable. Who wouldn't want to be effective in their altruism, after all? And surely it's just a simple fact that technological development would accelerate given that newer advances build off the old, right?

But scratching the surface of both reveals their true form: a twisted morass of Silicon Valley techno-utopianism, inflated egos, and greed.

Same as it always was.

Effective altruism

The one-sentence description of effective altruism sounds like a universal goal rather than an obscure pseudo-philosophy. After all, most people are altruistic to some extent, and no one wants to be ineffective in their altruism. From the group's website: "Effective altruism is a research field and practical community that aims to find the best ways to help others, and put them into practice." Pretty benign stuff, right?

Dig a little deeper, and the rationalism and utilitarianism emerge. Unsatisfied with the generally subjective attempts to evaluate the potential positive impact of putting one's financial support towards, say, reducing malaria in Africa versus ending factory farming versus helping the local school district hire more teachers, effective altruists try to reduce these enormously complex goals to "impartial", quantitative equations.

To build a rubric capable of confining the messy, squishy, human problems they have claimed to want to solve, they needed a philosophy. And effective altruists dove into the philosophy side of things with both feet. Countless hours have been spent around coffee tables in Bay Area housing co-ops, debating the morality of prioritizing local causes above ones that are more geographically distant, or how to rank the rights of animals relative to the rights of human beings. Thousands of posts and far more comments have been typed on sites like LessWrong, where individuals earnestly fling around jargon like "Bayesian mindset" and "quality-adjusted life years".

The problem with removing the messy, squishy, human part of decisionmaking is you can end up with an ideology like effective altruism: one that allows a person to justify almost any course of action in the supposed pursuit of maximizing their effectiveness.

Take, for example, the widely held belief among EAs that it is more effective for a person to take an extremely high-paying job than to work for a non-profit, because the impact of donating lots of money is far higher than the impact of one individual's work. (The hypothetical person described in this belief, I will note, tends to be a student at an elite university rather than an average person on the street — a detail I think is illuminating about effective altruism's demographic makeup.) This is a useful way to justify working for a company that many others might view as ethically dubious: say, a defense contractor developing weapons, a technology firm building surveillance tools, or a company known to use child labor. It's also an easy way to justify life's luxuries: if every hour of my time is so precious that I must maximize the amount of it spent earning so I may later give, then it's only logical to hire help to do my housework, or order takeout every night, or hire a car service instead of using public transit.

The philosophy has also justified other not-so-altruistic things: one of effective altruism's ideological originators, William MacAskill, has urged people not to boycott sweatshops ("there is no question that sweatshops benefit those in poor countries", he says). Taken to the extreme, someone could feasibly justify committing massive fraud or other types of wrongdoing in order to obtain billions of dollars that they could, maybe someday, donate to worthy causes. You know, hypothetically.

Other issues arise when it comes to evaluating who should be prioritized for aid. A prominent contributor to the effective altruist ideology, Peter Singer, wrote an essay in 1971 arguing that a person should feel as obligated to save a child halfway around the world as they do a child right next to them. Since then, EAs have taken this even further: why prioritize a child next to you when you could help ease the suffering of a better[1] child somewhere else? Why help a child next to you today when you could instead help hypothetical children born one hundred years from now?[2] Or help artificial sentient beings one thousand years from now?

The focus on future artificial sentience has become particularly prominent in recent times, with "effective altruists" emerging as one synonym for so-called "AI safety" advocates, or "AI doomers".[a] Despite their contemporary prominence in AI debates, these tend not to be the thoughtful researchers[b] who have spent years advocating for responsible and ethical development of machine learning systems and trying to ground discussions about the future of AI in what is probable and plausible. Instead, these are people who believe that artificial general intelligence (that is, a truly sentient, hyperintelligent artificial being) is inevitable, and that one of the most important tasks is to slowly develop AI such that this inevitable superintelligence is beneficial to humans and not an existential threat.

This brings us to the competing ideology:

Effective accelerationism

While effective altruists view artificial intelligence as an existential risk that could threaten humanity, and often push for a slower timeline in developing it (though they push for developing it nonetheless), there is a group with a different outlook: the effective accelerationists.

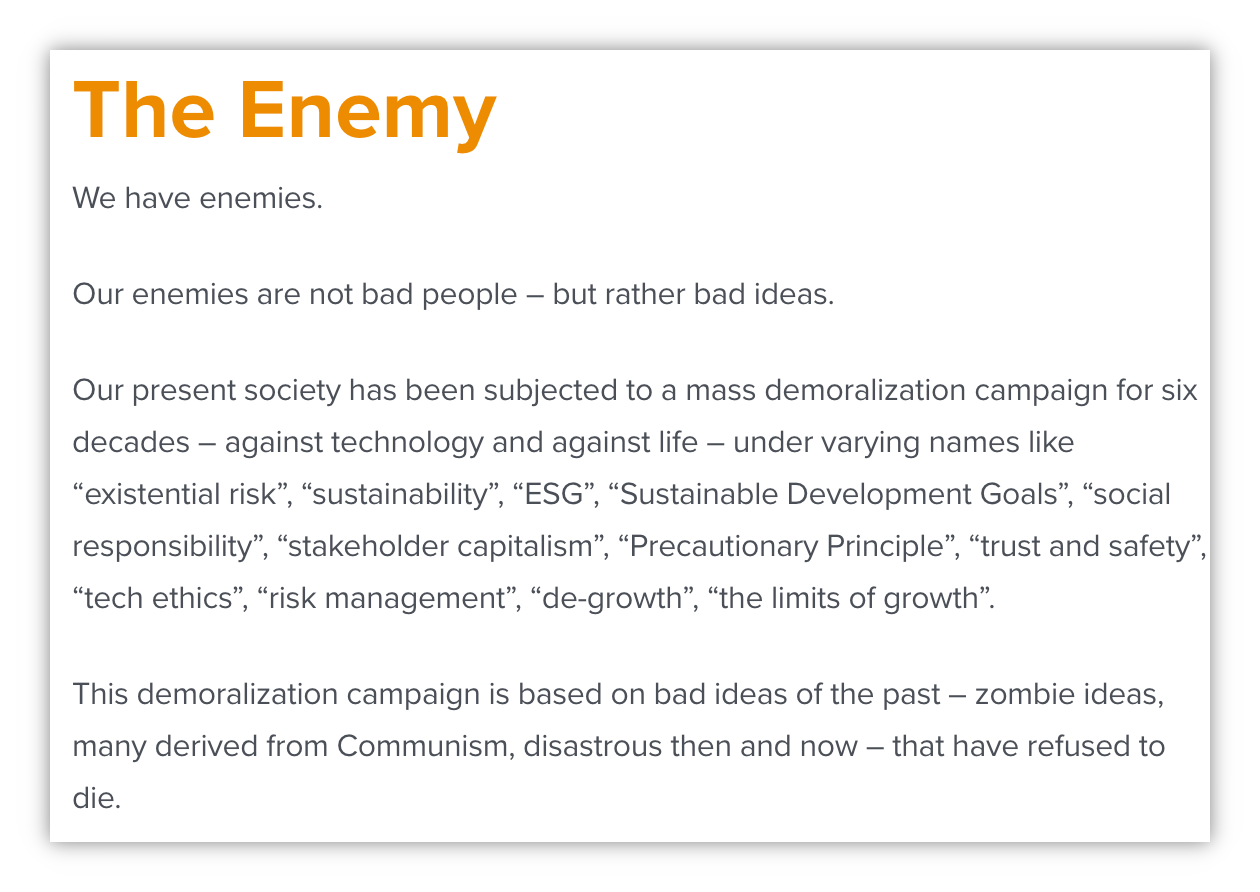

This ideology has been embraced by some powerful figures in the tech industry, including Andreessen Horowitz's Marc Andreessen, who in October 2023 published "The Techno-Optimist Manifesto", in which he worshipped the "techno-capital machine"[3] as a force destined to bring about an "upward spiral" if not constrained by those who concern themselves with such concepts as ethics, safety, or sustainability.

Those who seek to place guardrails around technological development are no better than murderers, he argues, for putting themselves in the way of development that might produce lifesaving AI.

This is the core belief of effective accelerationism: that the only ethical choice is to put the pedal to the metal on technological progress, pushing forward at all costs, because the hypothetical upside far outweighs the risks identified by those they brush aside as "doomers" or "decels" (decelerationists).

Despite their differences on AI, effective altruism and effective accelerationism share much in common (in addition to the similar names). Just like effective altruism, effective accelerationism can be used to justify nearly any course of action an adherent wants to take.

Both ideologies embrace as a given the idea of a super-powerful artificial general intelligence being just around the corner, an assumption that leaves little room for discussion of the many ways that AI is harming real people today. This is no coincidence: when you can convince people that AI might turn everyone into paperclips tomorrow, or on the flip side might cure every disease on earth, it's easy to distract them from today's issues of ghost labor, algorithmic bias, and erosion of the rights of artists and others. This is incredibly convenient for the powerful individuals and companies who stand to profit from AI.

And like effective altruists, effective accelerationists are fond of waxing philosophical, often at great length and with great surety that their ideas are the peak of rational thought.

Effective accelerationists in particular also like to suggest that their ideas are grounded in scientific concepts like thermodynamics and biological adaptation, a strategy that seems designed to woo the technologist types who are primed to put more stock in something that sounds scientific, even if it's nonsense. For example, the inaugural Substack post defining effective accelerationism's "principles and tenets" name-drops the "Jarzynski-Crooks fluctuation dissipation theorem" and suggests that "thermodynamic bias" will ensure that only positive outcomes reward those who insatiably pursue technological development. Effective accelerationists also claim to have "no particular allegiance to the biological substrate", with some believing that humans must inevitably forgo these limiting, fleshy forms of ours "to spread to the stars", embracing a future that they see as revolving mostly (if not entirely) around machines.

A new coat of philosophical paint

It is interesting, isn't it, that these supposedly deeply considered philosophical movements that emerge from Silicon Valley all happen to align with their adherents becoming disgustingly wealthy. Where are the once-billionaires who discovered, after their adoption of some "effective -ism" they picked up in the tech industry, that their financial situation was indefensible? The tech thought leaders who coalesced and wrote 5,000-word manifestos about community aid and responsible development?

While there are, in fairness, some effective altruists who truly do donate a substantial portion of their earnings and live rather modestly, there are others like Sam Bankman-Fried, who reaped the reputational benefits of being seen as a philanthropist without ever seeming to actually donate much of his once mind-boggling wealth to the charitable causes he claimed were so fundamental to his life's work. Other prominent figures in the effective altruism community rationalized spending £15 million (~US$19 million) not on malaria bed nets or even AI research, but on a palatial manor in Oxford to host their conferences.

Similarly, effective accelerationism has found resonance among Silicon Valley elites such as Marc Andreessen, a billionaire who can think of nothing better to do with his money than purchase three different mansions all in Malibu.

With each manifesto, from his 2011 "Why Software Is Eating the World" to his 2020 "It's Time to Build" to his most recent, Andreessen reveals himself as a shallow thinker who has not meaningfully built anything in about twenty years, but who is incredibly fearful of losing his hold on the technology industry, into whose neck he has sunk his teeth.

He can see that the shine has worn off the techno-utopianism that marked some of the earlier days of the industry, and painful lessons have taught investors and the public alike that not every company and founder is inherently benevolent. The "move fast and break things" era in tech has had devastating consequences, and the once-unquestioning admiration of larger-than-life technology personalities like himself has morphed into suspicion and disdain.

The threats to his livelihood are many: Workers are organizing in ways they never have in the technology sector. His firm's reputation has been tarnished by going all-in on web3 and crypto projects, a decision even he doesn't seem to understand. The public is meaningfully grappling with the idea that maybe not every technology that can be built should be built. Legislators are painfully aware of their past missteps in giving technology a free pass in the name of "innovation", and are increasingly suspicious of the power of tech firms and monopolies. They're actively considering regulatory change to place limits on once-lawless sectors like cryptocurrency, and hearings to discuss AI regulation were scheduled at breakneck speed after the emergence of some of the more powerful large language models.

Despite the futuristic language of his manifesto, its message is clear: Andreessen wants to go back. Back to a time when technology founders were uncritically revered, and when obstacles between him and staggering profits were nearly nonexistent. When people weren't so mean to billionaires, but instead admired them for "undertaking the Hero's Journey, rebelling against the status quo, mapping uncharted territory, conquering dragons, and bringing home the spoils for our community."[c]

The same is true of the broader effective accelerationist philosophy, which speaks with sneering derision of those who would slow development (and thus profits) or advocate for caution. In a world that is waking up to the externalities of unbridled growth, such as climate change, and of technology's "build now and work the kinks out later" philosophy, such as online radicalization, the negative impacts of social media, and the degree of surveillance creeping into everyday life, effective accelerationists too are yearning for the past.

More than two sides to this coin

Some have fallen into the trap, particularly in the wake of the OpenAI saga, of framing the so-called "AI debate" as a face-off between the effective altruists and the effective accelerationists. Despite the incredibly powerful and wealthy people who are self-professed members of one camp or the other, or whose ideologies align quite closely with one of them, it's important to remember that there are far more than two sides to this story.

Rather than embrace either of these regressive philosophies, both of which are better suited to indulging the wealthy in retroactively justifying their choices than to influencing any important decisionmaking, it would be better to look to the present and the realistic future, and to the expertise of those who have been working to improve technology for the benefit of all rather than just for themselves and the few just like them.

We've already tried out having a tech industry led by a bunch of techno-utopianists and those who think they can reduce everything to markets and equations. Let's try something new, and not just give new names to the old.

Footnotes

[a] Importantly, there are many (often highly contradictory) definitions of what constitutes "AI safety", and the vast majority of people who care about responsible development of AI are not effective altruists.

[b] People like Timnit Gebru, Alex Hanna, Margaret Mitchell, or Emily M. Bender.

[c] I must at this point remind you that this is a man who built a web browser, not goddamn Beowulf.

References

[1] Effective altruism has a long history of deeply troubling, eugenicist ideas about who exactly the "better" humans are.

[2] This is a view known as longtermism.

[3] A term he credits to Nick Land, whom he also names at the bottom of his manifesto in a list of "Patron Saints of Techno-Optimism". Land has explicitly advocated for eugenics and what he terms "hyper-racism".